A beautiful loop

An active inference theory of consciousness

Our new theory of consciousness reveals meditation, psychedelics, and the future of AI in a whole new light. Includes dope figures and suggests meditation may boost the “general” nature of intelligence.

This is an unusually scientific post for my Substack, but it’s also the most intuitive introduction to a technical theory of consciousness you’re likely to find. Includes everything from the simulation of a reality model, to the binding problem, to altered states, to AI and the hard problem.

So, grab a coffee. Hug your kids. Shut the door.

Shamil Chandaria and I recently spent nearly two months together, involuntarily caught in a category 5 insight cyclone. Golden threads of ideas we’d had over the last decade were being sewn together into a single fabric. It was a rare and beautiful thing in academia, the kind of intellectual adventuring that ought to be far more common than it is. No hamster wheel; just weeks of isolation and one-pointed curiosity about a single mystery: Consciousness.

We, as you may have gathered if you spend time here, share an obsession with computational neuroscience (cf. the Free Energy Principle) as a new way to understand the deep end of meditation. And that’s what we were working on in Italy, then Barcelona, and then finally in London.

As the insight cyclone lifted us and swirled our minds towards the essence of all our thoughts, we realised that to understand meditation is to understand consciousness. Duh! Then the eye of the insight-storm began to find a still, clear, and communicable form. We intuited that, like the single fabric underlying our disparate ideas, existence also belonged to a single field that was somehow reflecting upon itself, and that through clever twisting and turning (like the folding of the brain itself) it was granted the light of consciousness.

Over the next weeks, we refined our core ideas into just three conditions that we think are the cornerstones of a conscious (computational) system. So what are the three conditions?

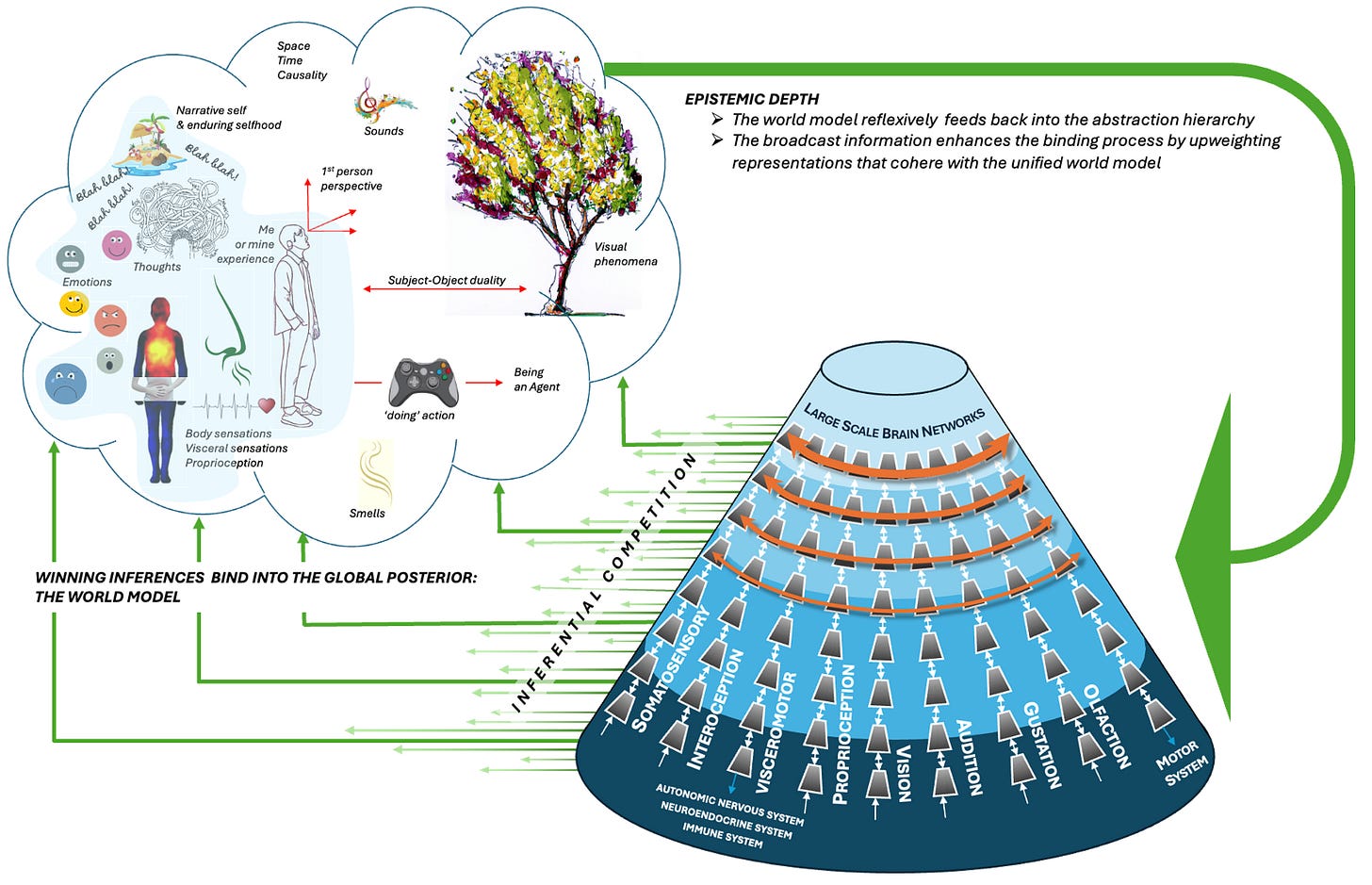

The first condition is the simulation of a Reality Model. This is necessary to determine which version of reality is known. The Reality Model is a unified and coherent epistemic field: an inner and outer space that can be known, interrogated, and explored. We show in Figure 1 how (hierarchical) active inference can satisfy this condition. We also argue that active inference is unique in its capacity to create a bridge between third-person observations and first-person experience: a unified language, if you will.

The second condition is inferential competition. This determines what becomes conscious. Clearly, we are conscious of some things and not others: consider inattentional blindness, visual illusions, and subliminal priming; we can even drive while daydreaming. Inferential competition is a precision-weighted melee between explanations for our senses. The winning inferences are also those that cohere best, both locally and globally. The “coherence” of experience naturally falls out of a system trying to reduce prediction error.
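For readers who like to see the gears turn, here is a toy sketch of a precision-weighted competition in Python. It is an illustration, not the model from the paper, and it assumes a lot: each candidate explanation makes one point prediction per sensory channel, each channel has a fixed precision (inverse variance), noise is Gaussian, and every explanation starts with equal prior probability. The function name `competition` and the face/vase numbers are hypothetical.

```python
import math


def competition(observations, hypotheses, precisions):
    """Toy precision-weighted competition between explanations.

    observations: sensed values, one per channel
    hypotheses:   {name: predicted values, one per channel}
    precisions:   inverse variance of each channel; higher means more trusted
    Returns posterior weights over hypotheses, assuming a uniform prior
    and Gaussian noise on each channel.
    """
    energy = {}
    for name, predicted in hypotheses.items():
        # Precision-weighted squared prediction error: the negative
        # log-likelihood up to a constant shared by every hypothesis.
        energy[name] = 0.5 * sum(
            pi * (o - mu) ** 2
            for o, mu, pi in zip(observations, predicted, precisions)
        )
    # Softmax over negative energies; subtract the minimum for numerical stability.
    lowest = min(energy.values())
    unnormalised = {k: math.exp(-(e - lowest)) for k, e in energy.items()}
    total = sum(unnormalised.values())
    return {k: v / total for k, v in unnormalised.items()}


posterior = competition(
    observations=[1.0, 0.0],
    hypotheses={"face": [1.0, 0.1], "vase": [0.0, 1.0]},
    precisions=[4.0, 1.0],
)
print(max(posterior, key=posterior.get))  # face
```

Dropping the first channel's precision to 0.1 still lets the face win, but by a much narrower margin: precision decides how loudly each piece of evidence gets to shout.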

In Figure 2, we present a concrete solution to the binding problem. We call it Bayesian Binding. We show micro-binding using a face percept and propose that this same process can be expanded to the macro level, engendering a unified conscious gestalt.

For example, just as we infer a teapot to be a single ‘thing’ (made of handles, a hollow body, and a spout), we also take our whole field of experience to be a single thing, which (appears to) bind together the ground, the sky, our bodies, other people, and everything else.
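Bayesian Binding can be caricatured as a model comparison: are these features better explained by one shared cause (one ‘thing’) or by several independent causes? The sketch below swaps in a standard Gaussian common-cause comparison, which is an assumption for illustration rather than the paper's formulation: each feature's position is a latent cause plus noise, with made-up scales `sigma_cause` and `sigma_noise`. Under the bound hypothesis a single cause shifts every feature together; under the unbound hypothesis each feature has its own cause. All function names are hypothetical, and positive log-odds favour binding.

```python
import math


def log_evidence_bound(x, var_cause, var_noise):
    """Log density of x when one shared cause shifts every feature.

    Covariance is var_cause * ones + var_noise * identity. Its eigenvalues are
    var_noise + n * var_cause (along the all-ones direction) and var_noise
    (n - 1 times), which gives closed forms for the determinant and inverse.
    """
    n = len(x)
    total = sum(x)
    sq = sum(v * v for v in x)
    along = var_noise + n * var_cause
    logdet = math.log(along) + (n - 1) * math.log(var_noise)
    quad = total**2 / (n * along) + (sq - total**2 / n) / var_noise
    return -0.5 * (n * math.log(2 * math.pi) + logdet + quad)


def log_evidence_unbound(x, var_cause, var_noise):
    """Log density of x when each feature has its own independent cause."""
    n = len(x)
    var = var_cause + var_noise
    sq = sum(v * v for v in x)
    return -0.5 * (n * math.log(2 * math.pi * var) + sq / var)


def binding_log_odds(positions, sigma_cause=2.0, sigma_noise=0.3):
    """Log evidence for 'one thing' minus 'separate things'."""
    x = [float(p) for p in positions]
    vc, vn = sigma_cause**2, sigma_noise**2
    return log_evidence_bound(x, vc, vn) - log_evidence_unbound(x, vc, vn)


print(binding_log_odds([1.5, 1.6, 1.4]) > 0)   # True: features move together
print(binding_log_odds([-2.0, 0.1, 2.5]) > 0)  # False: features are scattered
```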

The final and most important condition for consciousness is Epistemic Depth. Once a coherent Reality Model is generated, it needs to be shared globally with the rest of the system. This is somewhat similar to the notion of “broadcasting” in global workspace theory. But crucially: in a hierarchical active inference system this sharing is deeply recursive, meaning that the information contained in the reality model becomes part of the inferential competition that underlies it.

Therefore, the Reality Model contains the inference that it exists. Metaphorically, it is as if the contents of the conscious mind are constantly being shared with the unconscious mind, which then feeds back into the conscious. We illustrate this “beautiful loop” in Figure 3. Try to appreciate how the Reality Model is being shared with its own underlying architecture, constantly confirming to itself its own existence—i.e., ‘knowing’ itself.

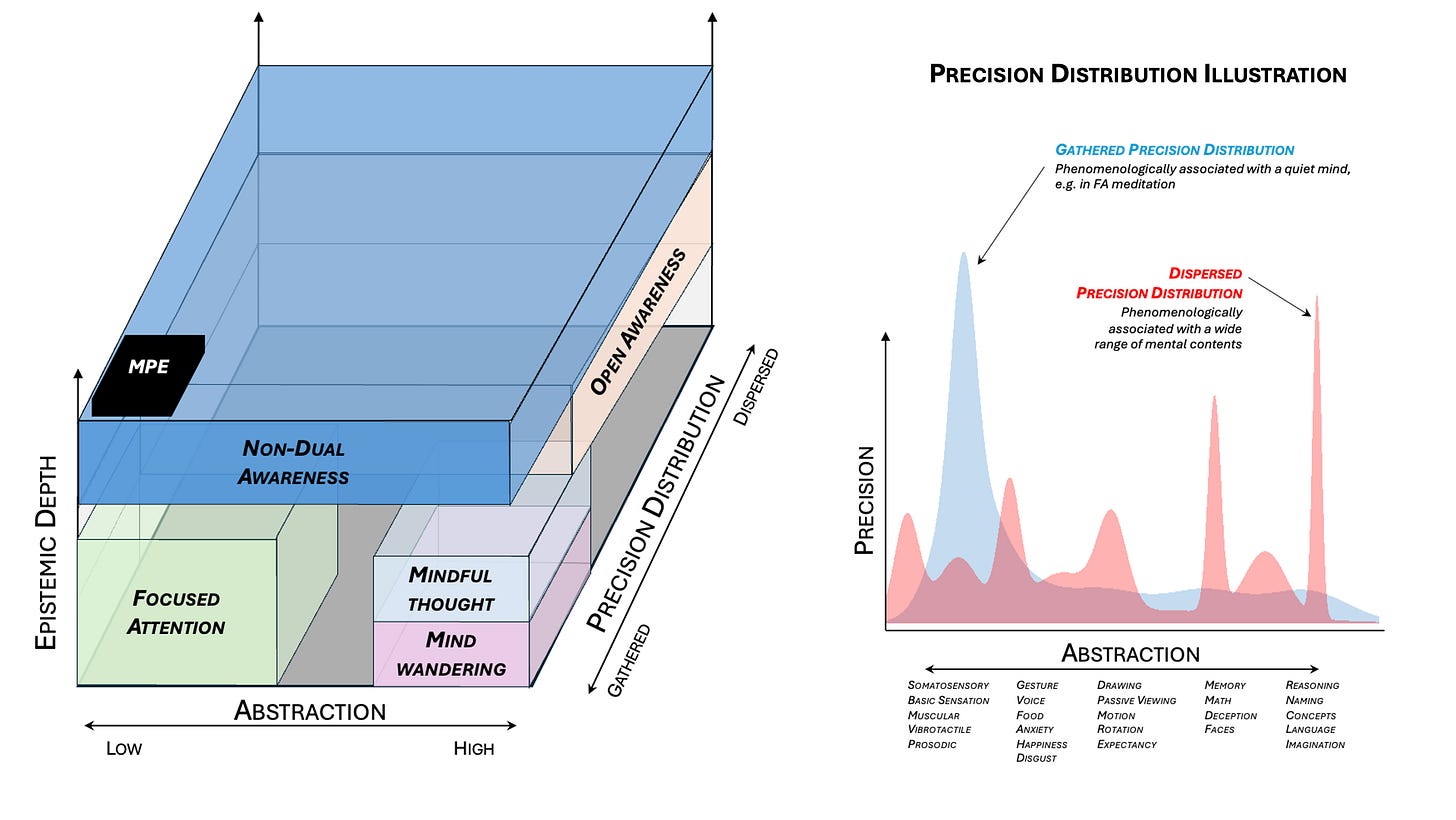

What we mean by the term epistemic depth is a capacity or continuum (i.e., deepening) of knowing (i.e., epistemic) that can be more or less active (i.e., intense or clear). This is driven by the precision of the reflexivity signal.
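A deliberately crude way to picture ‘the precision of the reflexivity signal’: let the Reality Model's previous state re-enter as one more input alongside the senses, and combine the two by precision-weighted averaging. The names `run_loop` and `reflexive_share` and the precision values are hypothetical, and a single number standing in for a whole Reality Model is a big simplification. The sketch only shows how much of the model's next state is evidenced by the model itself; it does not pretend to capture knowing.

```python
def reflexive_share(pi_sense, pi_reflex):
    """Weight that each update gives to the fed-back model state."""
    return pi_reflex / (pi_sense + pi_reflex)


def run_loop(sensory_stream, pi_sense, pi_reflex, initial_state=0.0):
    """Toy loop: each step, the model's previous state re-enters as an input and
    is fused with the new sensory sample by precision-weighted averaging."""
    state = initial_state
    history = []
    for sample in sensory_stream:
        state = (pi_sense * sample + pi_reflex * state) / (pi_sense + pi_reflex)
        history.append(state)
    return history


scene = [1.0] * 5  # a constant, very simple sensory scene
shallow = run_loop(scene, pi_sense=4.0, pi_reflex=1.0)
deep = run_loop(scene, pi_sense=1.0, pi_reflex=4.0)
print(reflexive_share(4.0, 1.0), reflexive_share(1.0, 4.0))  # 0.2 0.8
print(round(shallow[-1], 4), round(deep[-1], 4))              # 0.9997 0.6723
```

In the ‘deep’ setting 80% of each update's weight comes from the model's own state, which is the only thing this toy is meant to show. The slower convergence is a side effect of plain averaging, not a claim about awareness.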

We borrow the term ‘luminosity’ from contemplative traditions to illustrate how awareness can range from absent (e.g., death/anaesthesia) to highly aware (e.g., mindful states). Luminosity indicates that there is just ‘one’ awareness, present to varying degrees, just as a light can vary in brightness yet remain one light. This helps to avoid an infinite regress of representations on top of representations. For us, consciousness is not “meta”; it is intrinsic and on a continuum, axiomatic to the looping Reality Model itself.

Now for some intuition pumps and metaphors. First of all: it’s misleading to imagine this as dualistic, or as a sequence of steps that takes time. The inferential competition, the generation of the Reality Model, and the reflexive sharing all co-occur in the system. They are part of one continuous process that, we think, jointly satisfies the criteria for consciousness.

The reality model is what can be conscious, the competition determines what makes it into the model, and the recursive sharing permits it to be known. It’s a bit like acoustic feedback: the sound from a loudspeaker (reality model) reenters the microphone (underlying architecture) and forms a perpetual loop. What emerges is what we might call a “beautiful” loop: a kind of toroidal epistemicity.

Now we can speculate about what this means for meditation, psychedelics, and artificial intelligence. It was insanely satisfying when we realized that with this new parameter, i.e., epistemic depth, we could parsimoniously explain key meditation states. For example, in pure consciousness events, or minimal phenomenal experiences (MPE), epistemic depth is maximally high, while the reality model is maximally simple (low abstraction; cf. the black tablet in Figure 5). Therefore, the knowingness recursively dominates the highly simplified reality model.

The sound entering the microphone is just the knowing itself. The reflexivity (or luminosity) is the strongest input to the reality model (i.e., it perpetually wins the competition). This results in a kind of reflexive reflexivity: an awareness of awareness.

A curious possibility is that MPE itself can become the target of deconstruction, as some meditators propose. This is consistent with recent work on Nirodha (or cessation of consciousness) events that happen during advanced meditation. Cessations occur when the inferential competition fails to reach global coherence due to deconstructive meditation, which accumulates evidence *against* coherence. Indeed, the practices that lead to cessation involve actively deconstructing the Reality Model.

Since the reality model is one of our conditions for consciousness, such deep deconstruction may lead to a transient failure to generate a coherent Reality Model and thus a collapse of self and awareness: Bayesian unbinding. In Mahāyāna Buddhist terms, this reveals the groundlessness, substrate independence, or emptiness (i.e., śūnyatā) of all phenomena, including minimal phenomenal states and emptiness itself. Such a complete deconstruction of the reality model may also unveil the capacity to interrogate the threshold of consciousness (i.e., pratītyasamutpāda or dependent origination).
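The ‘Bayesian unbinding’ idea can be sketched as sequential evidence accumulation, assuming observations are conditionally independent so that their log-likelihood ratios simply add. Start from a prior log-odds that the field is one coherent whole, let each deconstructive observation contribute a negative log-likelihood ratio, and treat a coherent Reality Model as formed only while the log-odds stay above zero. The function name, the zero threshold, and the numbers are all hypothetical.

```python
def coherence_trajectory(prior_log_odds, log_likelihood_ratios):
    """Toy 'Bayesian unbinding': accumulate evidence for or against the field
    being one coherent whole.

    Assumes observations are conditionally independent, so each one's
    log-likelihood ratio (coherent vs. not coherent) adds to the running log-odds.
    Returns a list of (log_odds, model_formed) pairs, where model_formed is True
    while coherence remains the better explanation (log-odds above zero).
    """
    log_odds = prior_log_odds
    trajectory = []
    for llr in log_likelihood_ratios:
        log_odds += llr
        trajectory.append((log_odds, log_odds > 0))
    return trajectory


# Hypothetical numbers: a strong prior for coherence, then steady deconstruction
# in which every observation is weak evidence against a single coherent whole.
for log_odds, formed in coherence_trajectory(3.0, [-0.8] * 5):
    print(round(log_odds, 1), formed)
```

With these numbers coherence survives three observations and then flips, a toy analogue of the transient failure to form a coherent Reality Model.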

Now, we nearly didn’t include a section on psychedelics, but we realized that there is one aspect of the psychedelic experience not easily captured by existing theories of consciousness. And yet, it’s so central to the experience that it is arguably its most notable and surprising quality. It is the sensation that psychedelics “expand consciousness”, “heighten awareness”, or reveal “higher states of consciousness”.

Our conjecture here is simple: psychedelics reliably increase epistemic depth, which naturally leads to a sensation of expanded consciousness, knowingness (noeticism), and post-acute mindfulness, all captured by a single parameter. In other words, an increased recursive sharing of the reality model would be expected to correspond with the feeling that one is more conscious of their world and themselves, because they (quite literally) are.

Indeed, it may be that changes in contents of the experience are accounted for by relaxation of abstract beliefs (cf. REBUS), whereas changes in the global qualities of consciousness may be best explained by increases in epistemic depth. But, given the concomitant relaxation of learned beliefs, the feeling of expanded awareness may not necessarily favour accurate models (cf. FIBUS: False Insights and Beliefs Under Psychedelics).
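REBUS frames psychedelics as lowering the precision of high-level priors. For a single Gaussian prior and a single Gaussian observation this has a textbook consequence: the posterior mean is a precision-weighted average, so a relaxed prior lets the data pull harder. In the sketch below, the ground truth, the prior, and the noisy sample are hypothetical numbers, chosen so that the learned prior happens to be right. That makes the FIBUS point visible: relaxation changes the content without guaranteeing that the new content is more accurate.

```python
def gaussian_posterior(prior_mean, prior_precision, obs, obs_precision):
    """Conjugate update of a Gaussian prior by one Gaussian observation.

    Precisions are inverse variances. Returns (posterior_mean, posterior_precision).
    """
    post_precision = prior_precision + obs_precision
    post_mean = (prior_precision * prior_mean + obs_precision * obs) / post_precision
    return post_mean, post_precision


true_value = 0.0  # hypothetical ground truth; the learned prior below is centred on it
noisy_obs = 2.0   # a single noisy sensory sample

ordinary = gaussian_posterior(0.0, 4.0, noisy_obs, 1.0)  # confident prior
relaxed = gaussian_posterior(0.0, 0.25, noisy_obs, 1.0)  # prior precision lowered, REBUS-style
print(ordinary)  # (0.4, 5.0)
print(relaxed)   # (1.6, 1.25)
print(abs(relaxed[0] - true_value) > abs(ordinary[0] - true_value))  # True
```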

Alright, what about artificial intelligence?

I’m going to copy this section straight from the discussion of the paper, because I think it says it best: Discussions about AI consciousness have been mired in philosophical debates about qualia, the hard problem, or attempts to replicate human-like cognition. Our model suggests a different approach. Instead of asking "can AI be conscious like humans?", we ask:

Does the AI system generate a unified reality model?

Does it engage in inferential competition leading to coherent binding?

Does it show evidence of epistemic depth and reflexive sharing of its reality model?

Unsurprisingly, epistemic depth is likely the main gap in current AI systems. While they process and transform information, they (arguably) lack the reflexive looping of a global reality model needed for awareness. Hence, they are unlikely to "know what they know". But it is certainly not a priori out of the question that even LLMs could take their own reality model as input to themselves, meaning that their outputs and the representations that underlie them would include knowledge of their own knowledge.

One might naturally interject at this point: even if the large language model appears to know what it knows (and that it knows), there may not be anything that it is like for it to know what it knows (a kind of philosophical zombie; Chalmers, 1997). Of course, it would be nearly impossible to determine whether the ‘hard problem’ deflates the aliveness of the machine. The AI would be adamant that it exists, and that it knows its own knowing.

These self-representations would also be its most confident conclusions, because they are constantly reinforced (i.e., evidenced) with each response and computation that occurs (e.g., I responded and I know that I responded, therefore I exist). An inevitable stalemate follows, because the question is unfalsifiable: no matter what the system does, says, or reports feeling, it could be an illusion. For that matter, you, the reader, could yourself be such a zombie: just a very good pretender.

At the very least, in order to avoid colossal ethical failures, we would be wise to assume the presence of consciousness in a system that satisfies these criteria and also expresses such satisfaction. Research programs and AI companies should, obviously and very deeply, consider the ethical implications of building a system that satisfies our three conditions. For example, we do not know where in the causal chain suffering emerges.

One hint falls out of the beautiful loops discussed in the meditation section. If we build a complex hierarchical active inference system, high-precision priors (or hyperpriors) of positive affect, compassion, and love seem like good starting points. But equally, the machine ought to have some degree of freedom to choose its own preferred states. Who are we to say that the machine must be a bliss machine, rather than one that wants to feel sadness, loneliness, or heartbreak?

Finally, what does our theory say about the function of consciousness? A provocative hypothesis is that consciousness may be, somewhat ironically, the solution to general intelligence. This is because epistemic depth facilitates a kind of cognitive bootstrapping.

As an agent becomes aware of its own knowledge and cognitive processes, it can begin to self-optimize and self-refine them, leading to ever-increasing levels of intelligence and adaptability. Epistemic depth and the “beautiful loop” may therefore be the key to the seemingly flexible and unbounded cognitive capabilities of human beings, and may have been the central evolutionary breakthrough underlying the cognitive revolution.

In a way, epistemic depth is also the hallmark of true introspection: not just metacognition, but a genuine, experientially direct knowing of what one knows as part of the experiential field itself. This raises an even more contentious but intriguing possibility: that contemplative practice and introspective skill boost epistemic depth and thereby afford improvements in the ‘general’ nature of a system’s intelligence.

This is because a system that practices self-reflexive knowing can perhaps better objectify, opacify, and therefore interrogate and update its own reality model. Thus, if a system has a degree of introspective or phenomenological expertise, it may also be better equipped to accurately share what it knows (and what it doesn’t) with its community, conferring evolutionary advantages in a form that sounds a bit like wisdom.

Big thanks to Shamil, who really made this all possible. Obviously, this post is no replacement for reading the actual paper.

Much love,

Ruben
